Knowing how to debug SQL queries in MySQL is essential for maintaining database performance and ensuring accurate data retrieval. Whether you are troubleshooting slow queries or resolving execution errors, a systematic debugging process makes it far easier to manage and optimize your database systems.

This guide provides a comprehensive overview of techniques and tools, including analyzing execution plans with EXPLAIN, interpreting error logs, employing profiling features, and utilizing GUI-based tools like MySQL Workbench. By following these methods, you can identify bottlenecks, resolve issues efficiently, and improve the overall performance of your SQL queries.

Understanding the Basics of SQL Query Debugging

Debugging SQL queries is a fundamental skill for database administrators and developers working with MySQL. It involves systematically analyzing and resolving issues that prevent queries from executing correctly or efficiently. An effective debugging process ensures data integrity, optimizes performance, and minimizes downtime, which are critical components in managing reliable database systems.

At its core, SQL query debugging encompasses identifying errors, understanding query execution plans, and optimizing queries for better performance. Common issues encountered during SQL query execution include syntax errors, logical errors, performance bottlenecks, and unexpected results. Recognizing these problems early allows for targeted troubleshooting and efficient resolution, ultimately leading to more robust database applications.

Common Issues Encountered in SQL Query Execution

Many challenges can arise when executing SQL queries in MySQL, often stemming from incorrect syntax, inefficient query design, or database environment issues. Addressing them requires a clear understanding of the typical problems faced by developers and database administrators.

- Syntax Errors: Mistakes in query syntax such as misspelled keywords, missing commas, or incorrect clause placement cause the statement to fail immediately, typically with error 1064.

- Logical Errors: These occur when the query is syntactically valid but produces incorrect or unexpected results. Examples include an incorrect JOIN condition that returns incomplete or duplicated rows, or a DELETE statement that omits its WHERE clause and removes every row in the table.

- Performance Bottlenecks: Queries that scan entire tables or lack proper indexing can execute slowly. This often happens in large databases where inefficient joins or subqueries are used without considering data distribution.

- Resource Limitations: Insufficient server resources such as memory or CPU can cause query failures or slowdowns, especially during complex operations like aggregations or large data updates.

- Data Inconsistencies: Inconsistent or corrupted data can lead to inaccurate query results, emphasizing the importance of maintaining data integrity and enforcing proper constraints.

Typical Causes of Query Failures and Inefficiencies

Effective debugging begins with understanding the root causes of query failures and inefficiencies. These causes often relate to both query design and the underlying database architecture.

| Cause | Description |
|---|---|
| Missing Indexes | Without proper indexing, MySQL may perform full table scans, leading to slow query execution, especially on large datasets. |
| Improper Join Conditions | Incorrect or poorly written JOIN clauses can produce Cartesian products or incomplete data, affecting accuracy and performance. |
| Overly Complex Queries | Nested subqueries or multiple joins can increase processing time, especially if not optimized or rewritten for efficiency. |
| Unnecessary Data Retrieval | Selecting more columns or rows than necessary increases resource usage. Using SELECT * when only a few columns are needed is a common example. |
| Incorrect Use of Functions | Applying functions to columns improperly or unnecessarily (e.g., in WHERE clauses) can prevent index usage and slow down execution. |
| Transaction Locks | Long-running transactions or locking issues can block other queries, causing delays or failures. |

Understanding these fundamental causes is essential for developing effective debugging strategies, allowing for targeted improvements rather than trial-and-error approaches.
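
To make the "Incorrect Use of Functions" row concrete, here is a minimal sketch. It assumes the orders table used elsewhere in this guide has an index on order_date, which the original does not state. Compare the two plans with EXPLAIN on your own data before drawing conclusions.

```sql
-- Assumption: orders.order_date is indexed.
-- Wrapping the column in a function usually prevents MySQL from using that index:
EXPLAIN
SELECT customer_id, order_date
FROM orders
WHERE YEAR(order_date) = 2023;

-- The same filter written as a range on the bare column can use the index:
EXPLAIN
SELECT customer_id, order_date
FROM orders
WHERE order_date >= '2023-01-01'
  AND order_date <  '2024-01-01';
```

If the first plan shows type ALL and the second shows type range, the function call was the reason the index was ignored.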

Using MySQL EXPLAIN and DESCRIBE Statements

Effectively debugging SQL queries in MySQL involves understanding how the database engine processes your queries. Two vital tools in this process are the EXPLAIN and DESCRIBE statements. These commands provide valuable insights into query execution plans and table structures, enabling developers to optimize performance and troubleshoot issues with precision. Mastering their use helps in identifying bottlenecks, understanding table relationships, and clarifying how indexes are utilized during query execution.

EXPLAIN offers a detailed view of how MySQL executes a query, including information about table access methods, indexes used, and join types.

DESCRIBE reveals the structure of a table, such as column types, key constraints, and nullability, which directly impact query performance.

Utilizing EXPLAIN to Analyze Query Execution Plans

Analyzing the execution plan of a query using EXPLAIN allows developers to visualize how MySQL retrieves data, which is crucial for pinpointing inefficiencies. By examining the output, you can determine if indexes are being used effectively, whether full table scans are occurring, or if join operations are optimized. This information guides adjustments in query design and indexing strategies, leading to faster, more efficient database interactions.

To illustrate this, consider a sample SELECT statement retrieving customer orders:

EXPLAIN SELECT customers.name, orders.order_date

FROM customers

JOIN orders ON customers.id = orders.customer_id

WHERE orders.order_date > '2023-01-01';

The output from EXPLAIN is typically displayed as a table with columns such as id, select_type, table, type, possible_keys, key, key_len, ref, rows, and Extra. Here is a structured example of interpreting this output:

| id | select_type | table | type | possible_keys | key | key_len | ref | rows | Extra |
|---|---|---|---|---|---|---|---|---|---|
| 1 | SIMPLE | customers | ALL | PRIMARY | NULL | NULL | NULL | 1000 | NULL |
| 1 | SIMPLE | orders | ref | customer_id | customer_id | 4 | customers.id | 150 | Using where |

In this example, the table ‘customers’ is being accessed via a full table scan (type ‘ALL’), which can be inefficient for large datasets. The ‘orders’ table uses an index on ‘customer_id’ (‘ref’ type), indicating a more optimized index utilization. Recognizing such details helps identify where query performance can be improved by adding or modifying indexes or rewriting queries to reduce full scans.
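
One way to act on such a plan is sketched below. It assumes that orders.order_date has no index yet, and the index name idx_orders_order_date is made up for illustration. Whether the optimizer actually picks the new index depends on how selective the date filter is on your data.

```sql
-- Hypothetical index name; assumes order_date is not indexed yet.
CREATE INDEX idx_orders_order_date ON orders (order_date);

-- Re-run the plan and compare the type, key, and rows columns with the earlier output.
EXPLAIN
SELECT customers.name, orders.order_date
FROM customers
JOIN orders ON customers.id = orders.customer_id
WHERE orders.order_date > '2023-01-01';
```

If the plan now starts from orders with type range and reaches customers through its primary key (type eq_ref), the full scan of customers has been avoided.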

Using DESCRIBE to Understand Table Structures

DESCRIBE provides a detailed layout of a table’s structure, revealing information that impacts query performance and correctness. Understanding the schema allows developers to optimize queries, especially by knowing which columns are indexed, their data types, and whether they are nullable. This understanding helps in designing queries that leverage indexes effectively and avoid unnecessary table scans or conversions.

For example, executing DESCRIBE on a ‘products’ table might produce the following output:

| Field | Type | Null | Key | Default | Extra |
|---|---|---|---|---|---|
| product_id | INT(11) | NO | PRI | NULL | auto_increment |
| product_name | VARCHAR(255) | NO | | NULL | |
| category_id | INT(11) | YES | MUL | NULL | |

This table structure indicates that ‘product_id’ is a primary key with auto-increment, ensuring uniqueness and fast retrieval. The ‘MUL’ value in the Key column means that ‘category_id’ is the first column of a non-unique index, so it may serve as a foreign key and the index can be used for join operations or filtering. Knowing this structure allows developers to craft queries that use these indexes efficiently, such as filtering by ‘category_id’ or retrieving products by ‘product_id’.
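
DESCRIBE shows only a summary of each column's key status. The statements below are a small sketch of how to see the full index and constraint definitions for the same example ‘products’ table.

```sql
-- Column summary, as in the table above.
DESCRIBE products;

-- One row per indexed column, including index name, column order, and cardinality.
SHOW INDEX FROM products;

-- The complete definition, including foreign keys and the storage engine.
SHOW CREATE TABLE products;
```

SHOW INDEX is the quickest way to check whether a column is the leading column of a multi-column index, which DESCRIBE cannot tell you.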

Enabling and Interpreting MySQL Error Logs

Effectively debugging SQL queries in MySQL requires access to detailed error logs that capture server events and query-related issues. Properly enabling and understanding these logs can significantly streamline the troubleshooting process, helping identify the root causes of errors and optimize query performance.

Utilizing MySQL error logs allows database administrators and developers to monitor server health, detect recurring problems, and analyze specific query failures. Accurate interpretation of log entries provides insights into syntax errors, resource constraints, or configuration issues, thus enabling targeted fixes and improved database reliability.

Locating and Enabling MySQL Error Logs

Before interpreting error log entries, it is essential to ensure that MySQL error logging is enabled and the log files are accessible. Several steps are involved in locating and activating these logs, which may vary depending on the operating system and MySQL version in use.

- Check the current MySQL configuration to determine if error logging is active. This can be done by executing the following command within the MySQL client:

SHOW VARIABLES LIKE 'log_error';

- If the log is not enabled, locate the configuration file, typically my.cnf (Linux) or my.ini (Windows). Open this file with administrative privileges.

- Within the configuration file, find or add the parameter for error logging. For example:

log_error = /var/log/mysql/error.log

This specifies the path where MySQL will write error logs.

- Ensure the directory exists and MySQL has write permission to the log file location.

- Restart the MySQL service to apply the changes. On Linux systems, use a command such as:

sudo systemctl restart mysql

- Verify that the log file is being populated, for example by checking it for the startup messages written during the restart.

Proper configuration ensures that MySQL captures relevant error events, which can be invaluable during troubleshooting sessions.
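
After the restart, the active settings can be confirmed from a client session. This is a minimal sketch that uses the log_error and log_error_verbosity system variables. The column aliases are arbitrary.

```sql
-- Where the server writes its error log ('stderr' means console output),
-- and how much detail it records:
-- 1 = errors only, 2 = errors and warnings, 3 = errors, warnings, and notes.
SELECT @@global.log_error           AS error_log_destination,
       @@global.log_error_verbosity AS verbosity;
```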

Interpreting Error Log Entries for Query Issues

Understanding the entries within MySQL error logs is crucial for diagnosing and resolving query issues. Logs typically contain timestamped entries, error codes, and descriptive messages that point to specific problems encountered during query execution or server operations.

When analyzing log entries, focus on patterns or recurring errors, which may indicate underlying configuration problems, resource limitations, or faulty queries. The following guide helps interpret common components within log entries:

- Timestamp: Indicates when the error occurred, aiding in correlating logs with specific query activities or server events.

- Error severity: Ranges from warnings to critical failures, guiding prioritization of issues.

- Error code: Numeric identifiers such as 1064 (syntax error) or 1146 (table does not exist) provide quick insights into the nature of the problem.

- Descriptive message: Offers context and details about the error, often including the problematic SQL statement or system component involved.

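Below are sample snippets with detailed explanations. Statement-level errors such as 1064 and 1146 are normally returned to the client session that issued the query rather than written to the server error log, so treat these snippets as illustrations of the codes and messages you will encounter, whichever channel reports them.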

Sample Log Entry 1: Syntax Error

2024-04-25T14:32:10.543123Z 12345 [ERROR] 1064 - You have an error in your SQL syntax; check the manual that corresponds to your MySQL version for the right syntax to use near 'SELEC * FROM users' at line 1

- Error code 1064: Indicates a syntax error in the SQL statement.

- Message details: Highlights the specific part of the query causing the error, in this case, a misspelling of ‘SELECT’ as ‘SELEC’.

Sample Log Entry 2: Missing Table

2024-04-25T15:05:22.789654Z 12346 [ERROR] 1146 - Table 'mydb.users' doesn't exist

- Error code 1146: Denotes a missing table error.

- Message details: Specifies which table was not found, prompting verification of database schema and table existence.

Sample Log Entry 3: Resource Limit Exceeded

2024-04-25T16:12:55.112233Z 12347 [ERROR] 3024 - Query execution was interrupted, maximum statement execution time exceeded

- Error code 3024: Signifies that a SELECT statement was interrupted because it exceeded the max_execution_time limit. (Error 1317 is the related code for a query that was interrupted for another reason, such as being killed.)

- Message details: Indicates the need to optimize the query or raise the max_execution_time setting.

By systematically analyzing these log entries, database professionals can pinpoint query issues effectively, make informed adjustments, and improve overall database performance and stability.
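
Because statement errors are reported to the session that ran the statement, it is often quicker to inspect them from the client. The sketch below reuses the mydb.users table name from the sample entry. The last query assumes MySQL 8.0.22 or later, where the performance_schema.error_log table is available.

```sql
-- A statement that fails if the table does not exist.
SELECT * FROM mydb.users;

-- Errors raised by the most recent statement in this session.
SHOW ERRORS;

-- Errors, warnings, and notes raised by the most recent statement.
SHOW WARNINGS;

-- MySQL 8.0.22+: read recent server error-log entries with SQL.
SELECT LOGGED, PRIO, ERROR_CODE, SUBSYSTEM, DATA
FROM performance_schema.error_log
WHERE PRIO = 'Error'
ORDER BY LOGGED DESC
LIMIT 20;
```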

Employing MySQL Query Profiling Tools

Effective debugging of SQL queries extends beyond syntax and logic; understanding how queries perform during execution is crucial for optimization. MySQL provides robust profiling tools that enable developers and database administrators to analyze the internal execution process, identify bottlenecks, and optimize query performance. Leveraging these profiling features can lead to more efficient database operations and a faster, more responsive system.

Using MySQL query profiling tools involves activating profiling features, generating detailed reports, and interpreting the data to pinpoint performance issues. These tools offer granular insights into each stage of query execution, such as parsing, optimization, and data retrieval, making them invaluable for diagnosing complex performance problems.

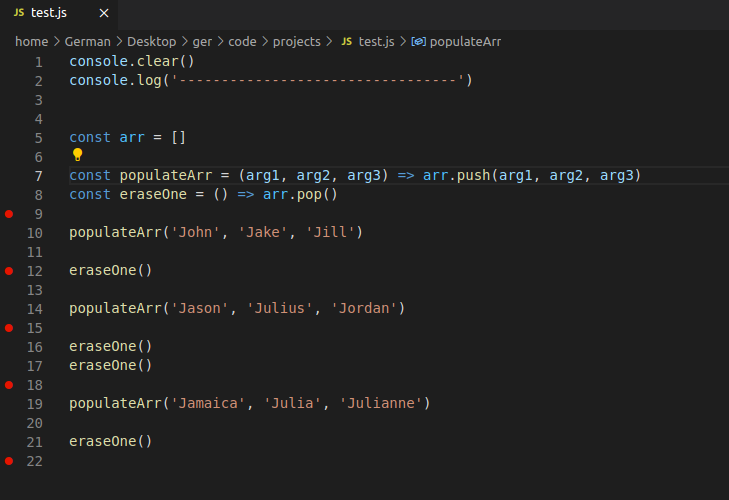

Activating and Using MySQL Profiling Features

To use the profiling tools, first enable profiling within the MySQL session. Once it is enabled, subsequent queries are profiled so that you can inspect how long each execution stage took and what resources it consumed. Note that SHOW PROFILE and SHOW PROFILES are deprecated in favor of the Performance Schema, although they still work in MySQL 8.0.

Follow these steps to activate profiling:

- Execute the command SET profiling = 1; to turn on profiling for the current session.

- Run the desired SQL queries that require performance analysis.

- Retrieve the profiling information using the statement SHOW PROFILE [FOR QUERY n]; where n is the query number recorded in the session.

Additionally, for comprehensive analysis, the command SHOW PROFILES; provides an overview of all queries executed during the session, including execution times and query identifiers.
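
Put together, a profiling session might look like the sketch below. It reuses the customers and orders tables from the EXPLAIN example. The query ID 1 assumes this is the first statement profiled in the session, so use the ID that SHOW PROFILES actually reports.

```sql
-- Turn profiling on for this session only.
SET profiling = 1;

-- The statement to analyze.
SELECT c.name, o.order_date
FROM customers c
JOIN orders o ON c.id = o.customer_id
WHERE o.order_date > '2023-01-01';

-- List profiled statements with their Query_ID and total duration.
SHOW PROFILES;

-- Stage-by-stage timing for one statement (replace 1 with the reported Query_ID).
SHOW PROFILE FOR QUERY 1;

-- Turn profiling off again when finished.
SET profiling = 0;
```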

Generating, Viewing, and Analyzing Profiling Reports

Profiling data, once collected, can be examined to identify parts of the query that consume the most resources or take the longest to execute. MySQL offers commands to generate detailed reports that include information such as CPU usage, block I/O, and query execution steps.

The process involves:

- Executing SHOW PROFILE ALL FOR QUERY n; to obtain exhaustive profiling details for a specific query, which includes individual phases like parsing, copying to temp tables, sorting, etc.

- Using the output to pinpoint stages that are potential bottlenecks. For instance, if the sorting phase consistently takes longer, optimizing the ORDER BY clause or indexing relevant columns could improve performance.

- Analyzing cumulative profiling data, which helps in understanding patterns over multiple queries and identifying systemic issues affecting overall database performance.

Some tools also allow exporting profiling data into external formats (e.g., CSV) for further analysis or visualization using third-party tools, enhancing the ability to interpret complex performance metrics.

Identifying Bottlenecks in Query Execution

Profiling data is instrumental in revealing bottlenecks that hinder efficient query execution. Key indicators include high execution times in specific phases, excessive CPU utilization, or disproportionate resource consumption relative to query complexity.

By closely examining profiling reports, developers can recognize patterns such as:

- Repeated full table scans that suggest missing indexes.

- Extended sorting or temporary table creation, indicating inefficient joins or ordering clauses.

- High I/O wait times, pointing to disk bottlenecks or insufficient memory allocation.

For example, if a profiling report shows that a query spends significant time in the ‘Sending data’ phase, it may signal that the query retrieves more data than necessary, prompting refinements like adding WHERE conditions or limiting result sets.

Ultimately, the insights gained from profiling enable targeted optimizations, leading to faster query execution, reduced server load, and improved overall database responsiveness.
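
To see at a glance which stage dominates, the per-stage rows can be aggregated. This sketch reads the INFORMATION_SCHEMA.PROFILING table, which holds the same data as SHOW PROFILE and is deprecated along with it. It assumes profiling was enabled in the current session and that the statement of interest has Query_ID 1.

```sql
-- Total time per execution stage for one profiled statement, slowest first.
SELECT STATE,
       ROUND(SUM(DURATION), 6) AS total_seconds,
       COUNT(*)                AS occurrences
FROM INFORMATION_SCHEMA.PROFILING
WHERE QUERY_ID = 1
GROUP BY STATE
ORDER BY total_seconds DESC;
```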

Common Debugging Techniques and Best Practices

Effective debugging of SQL queries is essential for optimizing database performance and ensuring accurate data retrieval. This segment delves into proven procedures for isolating problematic query segments, testing individual components systematically, and adopting best practices to streamline the debugging process. By applying these techniques, database administrators and developers can efficiently identify and resolve issues, minimizing downtime and enhancing query reliability.

Debugging SQL queries requires a structured approach that emphasizes both analytical precision and iterative testing. Isolating problematic segments involves breaking down complex queries into manageable parts and analyzing each component independently. Testing query components separately allows for pinpointing specific conditions or joins that may cause errors or performance bottlenecks. Following a set of best practices ensures a disciplined debugging process, reducing trial-and-error and fostering a deeper understanding of query behavior.

Procedures to Isolate Problematic Query Segments

Isolating specific parts of a complex SQL query can reveal which segments contribute to errors or inefficiencies. The process involves breaking down the query into smaller, more manageable parts and testing each in isolation. This method helps identify whether issues stem from JOIN conditions, WHERE clauses, subqueries, or aggregation functions.

- Start by removing parts of the query one at a time, for example by commenting out sections or reducing the SELECT list to a few columns.

- Test each segment with sample data to observe its behavior and identify where the results deviate from expectations.

- Use temporary tables or views to materialize intermediate results, allowing for step-by-step validation.

- Leverage diagnostic commands like EXPLAIN to analyze execution plans of specific query segments, helping identify costly operations or missing indexes.

- Pay attention to complex conditions or nested subqueries that might be impacting overall performance or correctness.
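
The temporary-table step above can be sketched as follows, reusing the customers and orders tables from the earlier examples. The name tmp_recent_orders is made up for illustration.

```sql
-- Step 1: materialize the filtered orders on their own.
CREATE TEMPORARY TABLE tmp_recent_orders AS
SELECT customer_id, order_date
FROM orders
WHERE order_date > '2023-01-01';

-- Step 2: check the intermediate result before any join is involved.
SELECT COUNT(*) AS recent_order_rows
FROM tmp_recent_orders;

-- Step 3: add the join and compare the row count with step 2.
SELECT COUNT(*) AS joined_rows
FROM tmp_recent_orders t
JOIN customers c ON c.id = t.customer_id;

-- Clean up.
DROP TEMPORARY TABLE tmp_recent_orders;
```

If joined_rows is lower than recent_order_rows, some orders have no matching customer. If it is higher, customers.id is not unique and the join is duplicating rows.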

Methods to Test Query Components Individually

Testing individual components of a query provides clarity about their functionality and helps isolate errors or inefficiencies. This approach involves executing parts of the query independently to observe their outputs and behaviors, which is particularly useful when dealing with multi-join or nested queries.

- Extract and run subqueries separately to verify their correctness and the data they return.

- Use LIMIT clauses to restrict output during testing, making it easier to review results quickly.

- Replace complex expressions with static values temporarily to confirm whether the logic is functioning as intended.

- Check individual conditions in WHERE clauses by executing simplified queries with specific filter values.

- Utilize temporary variables or session variables to analyze intermediate calculations or data transformations within larger queries.
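
A few of these checks are sketched below against the customers and orders tables used in this guide. The literal customer IDs 1 and 2 and the variable name @north_customers are placeholders.

```sql
-- 1. Run the subquery on its own and look at a small sample.
SELECT id
FROM customers
WHERE region = 'North'
LIMIT 10;

-- 2. Replace the subquery with static values to test the outer query in isolation.
SELECT customer_id, order_date
FROM orders
WHERE customer_id IN (1, 2)
LIMIT 10;

-- 3. Keep an intermediate value in a session variable for later comparison.
SET @north_customers = (SELECT COUNT(*) FROM customers WHERE region = 'North');
SELECT @north_customers AS north_customer_count;
```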

Structured List of Best Practices for Iterative Debugging

Adopting best practices during the debugging process promotes efficiency, reduces errors, and enhances understanding of query behavior. The following structured approach ensures a systematic, disciplined, and effective debugging workflow:

- Reproduce the Issue: Confirm the problem consistently appears with specific data or conditions before troubleshooting.

- Simplify the Query: Reduce complexity by removing unnecessary parts, focusing on the core logic that causes the issue.

- Isolate Components: Break the query into smaller segments and test each independently, as outlined earlier.

- Use Diagnostic Tools: Leverage EXPLAIN, error logs, and profiling tools to gather insights into query execution plans and potential bottlenecks.

- Iterate and Refine: Make incremental modifications, validate results, and gradually reintroduce complexity, observing the impact at each step.

- Document Findings: Keep track of what changes have been effective, common issues identified, and solutions applied for future reference.

- Optimize and Validate: Once issues are resolved, review the query for optimization opportunities, such as index usage or query restructuring, and test performance improvements.

Following these procedures and best practices ensures a disciplined, thorough, and efficient approach to SQL query debugging, ultimately leading to more reliable and performant database systems.

Optimizing Slow-Running Queries

Efficient database performance is crucial for maintaining a responsive user experience and minimizing server resource consumption. Identifying and optimizing slow-running queries plays a pivotal role in achieving this goal. By systematically analyzing query execution, database administrators can pinpoint bottlenecks and implement targeted improvements to enhance overall performance.

Understanding the processes involved in detecting slow queries and applying effective optimization techniques allows for more efficient database management. This section explores procedures for locating slow queries, compares common optimization strategies, and discusses methods to rewrite queries for improved efficiency in MySQL databases.

Identifying Slow Queries Using Performance Schema or Logs

Detecting slow queries involves monitoring and analyzing the data generated during database operations. MySQL provides built-in features such as the Performance Schema and slow query logs that facilitate this process. Proper utilization of these tools enables DBAs to locate queries that adversely impact performance.

To effectively identify slow-running queries, it is essential to enable and configure MySQL’s performance monitoring features. The slow_query_log captures detailed information on queries exceeding a specified execution time, allowing for targeted analysis. Meanwhile, the Performance Schema offers real-time metrics and detailed insights into query execution patterns, resource consumption, and indexing efficiency.

- Enable the slow query log by setting slow_query_log = 1 in the MySQL configuration file.

- Configure the long_query_time parameter to define the threshold for slow queries, e.g., long_query_time = 2 (seconds).

- Review the slow query log file directly or summarize it with the mysqldumpslow utility. If log_output includes TABLE, query the mysql.slow_log table instead.

- Leverage Performance Schema tables such as events_statements_history and events_statements_summary_by_digest for detailed analysis.

Regular monitoring of logs and schema data helps identify consistent patterns, frequently slow queries, and potential areas requiring index optimization or query rewriting.
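
The same settings can also be changed at runtime, and the digest summary table can be ranked by total time. This is a sketch, not a tuned configuration. It assumes an account with privileges to set global variables and a server with the Performance Schema enabled. The column aliases are arbitrary.

```sql
-- Enable the slow query log without a restart (lost on restart unless also set in the config file).
SET GLOBAL slow_query_log = 'ON';

-- Threshold in seconds; the new global value applies to connections opened afterwards.
SET GLOBAL long_query_time = 2;

-- Where the log file is being written.
SHOW VARIABLES LIKE 'slow_query_log_file';

-- Ten statement patterns with the highest total execution time.
-- Timer columns are in picoseconds, hence the division by 1e12.
SELECT DIGEST_TEXT,
       COUNT_STAR                      AS executions,
       ROUND(SUM_TIMER_WAIT / 1e12, 3) AS total_seconds,
       ROUND(AVG_TIMER_WAIT / 1e12, 3) AS avg_seconds,
       SUM_ROWS_EXAMINED               AS rows_examined,
       SUM_NO_INDEX_USED               AS executions_without_index
FROM performance_schema.events_statements_summary_by_digest
ORDER BY SUM_TIMER_WAIT DESC
LIMIT 10;
```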

Comparison Table of Before-and-After Optimization Techniques

Applying optimization techniques can significantly reduce query execution time and improve system throughput. The following table illustrates typical improvements achieved through common query optimization strategies, highlighting the differences before and after applying these techniques.

| Aspect | Before Optimization | After Optimization |
|---|---|---|
| Query Structure | Using SELECT * retrieves every column, including ones the application never reads. | Specifying only required columns reduces data volume, e.g., SELECT id, name FROM table. |
| Index Usage | Queries lack proper indexing, resulting in full table scans. | Creating relevant indexes on frequently queried columns enables faster lookups. |
| Joins | Using suboptimal join conditions causes nested loops and slow execution. | Using proper join order and index-based joins reduces computation time. |
| Query Logic | Using inefficient conditions or redundant filters increases execution time. | Rewriting conditions, avoiding OR clauses that prevent index use, and leveraging derived tables optimize performance. |
| Execution Plan | No analysis; queries rely on default planner assumptions. | Using EXPLAIN to analyze plans and tuning queries based on the results. |

In favorable cases, such as replacing a full table scan on a large table with an index lookup, these optimization strategies can reduce query response times from seconds to milliseconds, improving user experience and reducing server load.

Methods to Rewrite Queries for Improved Efficiency

Optimizing slow queries often involves rewriting them to leverage better execution plans, reduce resource consumption, and simplify logic. Here are effective methods for rewriting queries to enhance performance:

- Limit the Result Set: Use LIMIT clauses to restrict the number of rows returned, especially during testing or pagination.

- Use EXISTS Instead of IN: For subqueries, replacing IN with EXISTS can lead to faster execution in some cases, particularly when the subquery returns a large dataset. Compare both forms with EXPLAIN, since recent MySQL versions often optimize them the same way.

- Apply Appropriate Indexes: Ensure columns used in WHERE, JOIN, or ORDER BY clauses are indexed to facilitate quick data retrieval.

- Avoid SELECT *: Explicitly specify only the columns needed to reduce data transfer and processing overhead.

- Rewrite Complex Joins: Simplify multi-table joins by breaking them into smaller, manageable subqueries or by restructuring query logic to minimize nested loops.

- Use Derived Tables or CTEs: When dealing with complex aggregations, Common Table Expressions (CTEs) or derived tables can simplify logic and improve readability, which may also enhance performance.

- Optimize WHERE Clauses: Write selective conditions and avoid functions on indexed columns that negate index usage.

For example, a query like:

SELECT * FROM orders WHERE customer_id IN (SELECT id FROM customers WHERE region='North');

can be rewritten as:

SELECT o.* FROM orders o JOIN customers c ON o.customer_id = c.id WHERE c.region='North';

Provided customers.id is unique (for example, a primary key), the join returns the same rows as the IN form. It can use indexes on the join columns, which often results in faster query execution.
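
The EXISTS variant mentioned in the list above would look like the sketch below for the same customers and orders tables. Prefixing it with EXPLAIN makes it easy to compare its plan with those of the IN and JOIN forms on your own data.

```sql
-- EXISTS form of the same filter; returns each matching order once,
-- even if customers.id were not unique.
EXPLAIN
SELECT o.*
FROM orders o
WHERE EXISTS (
    SELECT 1
    FROM customers c
    WHERE c.id = o.customer_id
      AND c.region = 'North'
);
```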

Handling Deadlocks and Transaction Issues

Managing transaction concurrency is vital for maintaining database integrity and performance. Deadlocks occur when two or more transactions compete for resources, each waiting for the other to release locks, resulting in a standstill. Proper detection and resolution of deadlocks are essential for ensuring smooth database operations, especially in environments with high transaction throughput.

This section explores the methods to identify deadlocks within MySQL, presents typical deadlock scenarios, and discusses effective strategies to resolve and prevent such issues. Understanding these techniques enables database administrators and developers to maintain optimal system availability and data consistency.

Detecting Deadlocks in MySQL

MySQL provides mechanisms to detect deadlocks through its error logs and information schema tables. When a deadlock occurs, the InnoDB storage engine automatically rolls back one of the transactions involved to resolve the conflict. Detecting deadlocks involves monitoring for specific error messages and analyzing the information provided by system tables.

Key steps to detect deadlocks include:

- Examining the error log file for deadlock-related entries. When the innodb_print_all_deadlocks setting is enabled, InnoDB writes each deadlock to the error log with details about the transactions involved, the locks they hold, and the locks they are waiting for.

- Running the SHOW ENGINE INNODB STATUS command. Its output includes a LATEST DETECTED DEADLOCK section that describes the most recent deadlock, including the involved transactions, locks, and queries. It is a primary tool for diagnosing deadlocks.

- Monitoring the performance_schema database. Tables like events_statements_history and data_locks provide insights into active transactions and locking issues, aiding in early detection.
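
The detection steps above map to a few statements, sketched here. The last query assumes the sys schema is installed, which is the default in MySQL 5.7 and later. It shows current lock waits, not past deadlocks.

```sql
-- Look for the LATEST DETECTED DEADLOCK section in the output.
SHOW ENGINE INNODB STATUS;

-- Record every deadlock in the error log, not only the latest one.
SET GLOBAL innodb_print_all_deadlocks = ON;

-- Sessions currently waiting on a lock, and the sessions blocking them.
SELECT waiting_pid, waiting_query, blocking_pid, blocking_query, wait_age
FROM sys.innodb_lock_waits;
```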

Examples of Deadlock Scenarios

Understanding typical deadlock scenarios helps in designing queries and transactions that avoid such conflicts. Here are some common deadlock examples formatted in a table:

| Scenario | Description | Outcome |
|---|---|---|
| Two Transactions Updating the Same Rows in Opposite Order | Transaction A locks Row 1 and tries to lock Row 2; Transaction B locks Row 2 and attempts to lock Row 1 at the same time. | Each transaction waits for a lock the other holds. InnoDB detects the cycle and rolls back one of the transactions with error 1213. |
| Concurrent Updates on Parent and Child Tables | Transaction A locks a record in the parent table, while Transaction B locks a related record in the child table, both attempting to update linked records. | Mutual waiting occurs if foreign key constraints enforce locking across related tables, leading to deadlocks. |
| Long-Running Select Locking Resources | One transaction holds a shared lock during a long SELECT; another transaction attempts to acquire an exclusive lock on the same rows. | The second transaction waits and may fail with a lock wait timeout (error 1205). This becomes a true deadlock only if the first transaction then requests a lock that the second one holds. |
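
The first scenario can be reproduced with two client sessions. The sketch below uses a hypothetical accounts table with id and balance columns, which is not part of the original examples. The statements must be run in the order shown, alternating between the sessions.

```sql
-- Session A
START TRANSACTION;
UPDATE accounts SET balance = balance - 10 WHERE id = 1;  -- A locks row 1

-- Session B
START TRANSACTION;
UPDATE accounts SET balance = balance - 10 WHERE id = 2;  -- B locks row 2

-- Session A
UPDATE accounts SET balance = balance + 10 WHERE id = 2;  -- A waits for B

-- Session B
UPDATE accounts SET balance = balance + 10 WHERE id = 1;  -- completes the cycle

-- InnoDB detects the cycle and one session receives:
-- ERROR 1213 (40001): Deadlock found when trying to get lock; try restarting transaction
```

The session that is not rolled back continues normally and can commit.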

Resolving and Preventing Deadlocks

Implementing effective procedures to resolve and prevent deadlocks ensures database stability and performance. Strategies include:

- Analyzing Deadlock Reports: Use SHOW ENGINE INNODB STATUS regularly to identify the transactions involved in deadlocks and understand the lock patterns that led to the conflict.

- Optimizing Transaction Design: Keep transactions short and focused, and avoid holding locks longer than necessary. Commit changes promptly to free resources.

- Consistent Locking Order: Access tables and rows in a consistent order across all transactions to prevent cyclic wait conditions.

- Use of Indexes: Proper indexing reduces locking scope and duration, minimizing conflict potential during updates and reads.

- Implementing Retry Logic: In application code, detect deadlock errors (error 1213) and implement retries with exponential backoff to mitigate transient deadlocks.

- Applying Locking Hints: Use explicit locking reads such as LOCK IN SHARE MODE (FOR SHARE in MySQL 8.0) or FOR UPDATE appropriately to control lock acquisition and reduce contention.

Key principle: Minimize transaction scope and acquire locks in a consistent order to prevent the cyclic dependencies that lead to deadlocks.
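
As an illustration of consistent lock ordering, the sketch below locks every row a transfer will touch up front, in ascending primary-key order, before changing anything. The accounts table and its columns are hypothetical. The approach relies on every transaction that touches these rows following the same ordering rule.

```sql
START TRANSACTION;

-- Lock both rows first, always in ascending id order.
SELECT id, balance
FROM accounts
WHERE id IN (1, 2)
ORDER BY id
FOR UPDATE;

-- The rows are already locked by this transaction, so these updates do not wait.
UPDATE accounts SET balance = balance - 10 WHERE id = 1;
UPDATE accounts SET balance = balance + 10 WHERE id = 2;

COMMIT;
```

With this pattern a competing transaction typically queues behind the first lock instead of forming a cycle, so it waits briefly rather than deadlocking.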

Automating Debugging and Monitoring

Efficient database management relies heavily on proactive monitoring and automation to detect issues early and streamline the debugging process. Setting up automated tools to monitor MySQL performance, errors, and query execution enables administrators to maintain optimal database health with minimal manual intervention. This approach not only saves time but also ensures that potential problems are identified and addressed promptly, reducing downtime and improving overall reliability.

Implementing automated debugging and monitoring involves integrating specialized tools and configuring them to generate actionable alerts based on predefined performance metrics or error conditions. Proper interpretation of these alerts and logs is critical for timely troubleshooting and maintaining a stable database environment. This section explores the approaches to automate monitoring, interpret alerts effectively, and provides a sample configuration snippet for establishing automated debug workflows within MySQL.

Setting Up Automated Monitoring Tools

Establishing robust automated monitoring requires selecting appropriate tools and configuring them to track key database metrics and logs continuously. These tools can range from built-in MySQL features to third-party solutions, each offering varying degrees of customization and automation capabilities.

- Using MySQL Enterprise Monitor: Provides real-time insights into server health, query performance, and error logs with customizable alerts.

- Integrating with Prometheus and Grafana: Collects metrics via exporters like mysqld_exporter, visualizes performance dashboards, and triggers alerts based on thresholds.

- Implementing Nagios or Zabbix: Monitors server availability and resource consumption, with alerting mechanisms for anomalies or failures.

- Configuring Log Collection Tools: Centralizes error logs using ELK Stack (Elasticsearch, Logstash, Kibana) or Graylog for easy analysis and alerting.
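
Whichever tool is chosen, much of what it collects comes from server status counters that can also be read by hand. The selection below is only an example set and not a recommended baseline.

```sql
-- A few counters that are useful as alert inputs.
SHOW GLOBAL STATUS
WHERE Variable_name IN ('Slow_queries',
                        'Threads_running',
                        'Innodb_row_lock_waits',
                        'Innodb_row_lock_time_avg',
                        'Aborted_connects');
```

Most of these values are cumulative since server start, so alerts are usually based on the rate of change between two samples. Threads_running is an exception because it reports the current value.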

Interpreting Automated Alerts and Logs

Automated tools generate alerts and logs that serve as early warnings for potential issues. Correct interpretation involves understanding the contextual significance of these signals and responding appropriately to mitigate risks.

- Analyzing Alert Severity: Categorize alerts based on severity levels—critical, warning, info—to prioritize responses.

- Correlating Data: Cross-reference alerts with historical logs or metrics to identify patterns, recurring errors, or resource bottlenecks.

- Identifying Root Causes: Use detailed logs and performance metrics to pinpoint underlying issues such as slow queries, deadlocks, or hardware failures.

- Documenting Incidents: Maintain records of identified issues and resolutions for future reference and continuous improvement.

Sample Configuration for Automated Debug Workflow

Below is a simplified example demonstrating how to set up an automated workflow using a combination of monitoring scripts and alerting mechanisms. This example illustrates configuring a cron job that checks MySQL error logs and triggers notifications for critical errors:

    #!/bin/bash
    # Sample Bash script for monitoring MySQL error logs

    LOG_FILE="/var/log/mysql/error.log"
    ALERT_EMAIL="dba@example.com"  # placeholder address; replace with your own

    # Check the most recent log lines for entries at ERROR level
    tail -n 50 "$LOG_FILE" | grep -F "[ERROR]" > /tmp/mysql_critical_errors.log

    # Send a notification only if matching entries were found
    if [ -s /tmp/mysql_critical_errors.log ]; then
        mail -s "MySQL Critical Errors Detected" "$ALERT_EMAIL" < /tmp/mysql_critical_errors.log
    fi

This script can be scheduled using cron to run at regular intervals, providing a hands-free way to monitor critical issues and notify relevant personnel automatically. More sophisticated setups can incorporate API integrations, centralized dashboards, and machine learning-based anomaly detection for enhanced automation and insight.

Summary

Debugging SQL queries in MySQL is a vital skill for database administrators and developers alike. Applying the techniques discussed here, from EXPLAIN and profiling to log analysis and deadlock diagnosis, can lead to faster query execution, fewer errors, and a more reliable database environment. Continuous monitoring and refinement will keep your database operating smoothly and efficiently over time.